Why You Should Avoid Deploying Sklearn Models to Production

...And here's how to make them deployment friendly.

Sklearn is the go-to library for all sorts of traditional machine learning (ML) tasks.

However, things get pretty concerning if we intend to deploy ANY sklearn-based model.

Why?

Let’s understand this today.

Sklearn models are primarily driven by NumPy, which runs most of its operations on a single CPU core (BLAS-backed routines such as matrix multiplication are a notable exception).

With little to no parallelization, there is massive room for improvement, and data teams are naturally wary of letting sklearn drive their production systems.

Another major limitation is that scikit-learn models cannot run on GPUs.

GPUs are important because many deployed systems demand lightning-fast predictions.

Yet, because sklearn models are built on NumPy (which only runs on CPUs), they cannot take advantage of GPUs.

Despite that, there’s a way to enable GPU support in sklearn models and massively speed up inference.

The core idea is to compile a trained traditional ML model (a random forest, for instance) into tensor computations.

Being driven by tensors, the model can seamlessly leverage features provided by deep learning-based inference systems, such as:

- Ease of deployment.
- Loading the model on hardware accelerators.
- Inference speed-ups using GPU support.
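To make the core idea concrete, here is a minimal NumPy sketch of one known way to express a decision tree as matrix operations: the "GEMM" strategy described in Microsoft's Hummingbird work. It is not necessarily the exact approach covered in the deep dive, and the function names (`compile_tree_gemm`, `predict_tree_gemm`, `predict_forest_gemm`) are hypothetical. One matrix picks out the feature each internal node tests, a comparison against the thresholds records which way every node would send a sample, a product with a ±1 path matrix identifies the single leaf whose path matches, and a final product reads out that leaf's value. A random forest then simply averages the per-tree outputs.

```python
import numpy as np


def compile_tree_gemm(n_features, children_left, children_right, feature, threshold, value):
    """Turn one binary decision tree into the five tensors of the GEMM strategy.

    Assumed node layout mirrors scikit-learn's `tree_` arrays: node 0 is the root
    and a leaf has children_left == children_right == -1. `value` is (n_nodes, n_outputs).
    """
    value = np.asarray(value, dtype=float)
    internal = [i for i in range(len(children_left)) if children_left[i] != -1]
    leaves = [i for i in range(len(children_left)) if children_left[i] == -1]
    col = {node: j for j, node in enumerate(internal)}
    leaf_col = {node: k for k, node in enumerate(leaves)}

    A = np.zeros((n_features, len(internal)))    # which feature each internal node tests
    B = np.zeros(len(internal))                  # threshold of each internal node
    C = np.zeros((len(internal), len(leaves)))   # +1 if leaf is in node's left subtree, -1 if right
    D = np.zeros(len(leaves))                    # number of left turns on each leaf's path
    E = np.zeros((len(leaves), value.shape[1]))  # output stored at each leaf

    for node, j in col.items():
        A[feature[node], j] = 1.0
        B[j] = threshold[node]

    # Depth-first walk recording, for every leaf, the turns taken to reach it.
    stack = [(0, [])]
    while stack:
        node, path = stack.pop()
        if children_left[node] == -1:
            k = leaf_col[node]
            for j, went_left in path:
                C[j, k] = 1.0 if went_left else -1.0
            D[k] = sum(went_left for _, went_left in path)
            E[k] = value[node]
        else:
            j = col[node]
            stack.append((children_left[node], path + [(j, True)]))
            stack.append((children_right[node], path + [(j, False)]))
    return A, B, C, D, E


def predict_tree_gemm(X, A, B, C, D, E):
    """Branch-free inference: matrix products plus element-wise comparisons."""
    X = np.asarray(X, dtype=float)
    T = (X @ A <= B).astype(float)     # 1 where a sample goes left at a node (sklearn's <= rule)
    leaf = (T @ C == D).astype(float)  # one-hot: only the leaf whose whole path matches
    return leaf @ E


def predict_forest_gemm(X, compiled_trees):
    """A random forest averages its trees, so average the per-tree outputs."""
    return np.mean([predict_tree_gemm(X, *t) for t in compiled_trees], axis=0)
```

To try this on a fitted scikit-learn tree `est`, one could pass `est.n_features_in_` and the `est.tree_` arrays `children_left`, `children_right`, `feature`, `threshold`, and `value[:, 0, :]` (row-normalized, since older scikit-learn versions store class counts there), then loop over `rf.estimators_` for a forest. Because inference reduces to matrix products and comparisons, the same three lines could in principle run on a GPU by swapping NumPy arrays for PyTorch or CuPy tensors; the sketch itself stays on the CPU.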

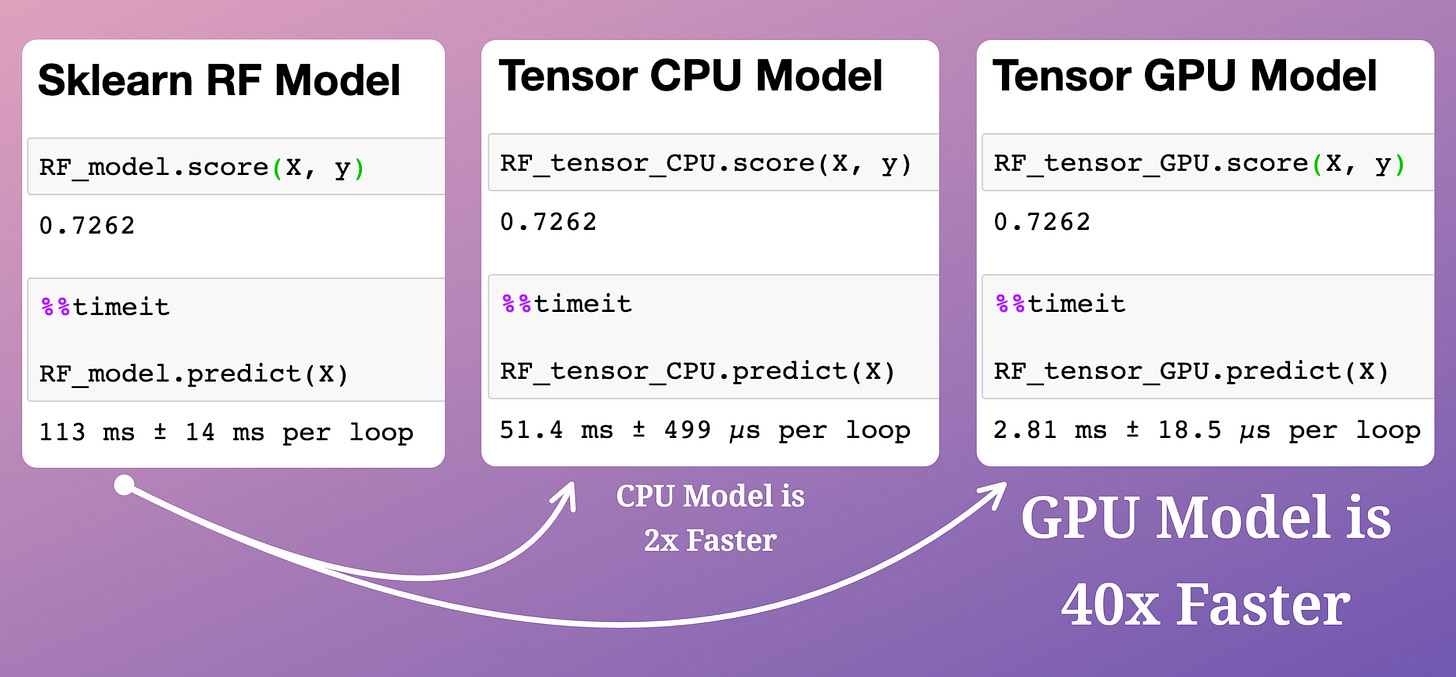

The efficacy of this compilation technique is evident from the image below:

Here, we have trained a random forest model.

The compiled model runs:

- Over twice as fast on a CPU.
- ~40 times faster on a GPU, which is huge.

All models have the same accuracy, indicating no loss of information during compilation.

Now, if you are wondering:

- What are these compilation strategies?
- How do they work?
- How do we implement them?
- How can a random forest even be represented as matrix computations, when that seems so counterintuitive?

…then this is precisely what we discussed in a recent machine learning deep dive: Sklearn Models are Not Deployment Friendly! Supercharge Them With Tensor Computations.

Inference run-time is a critical business metric in ML deployment.

As enterprises mostly work on tabular datasets, classical ML techniques such as linear models and tree-based ensemble methods are vital in modeling them.

Yet, in the current landscape, one is often compelled to train and deploy deep learning-based models just because they offer optimized matrix operations using tensors that can be loaded on GPUs.

Learning about these compilation techniques and utilizing them has been extremely helpful to me in deploying GPU-supported classical ML models.

Thus, learning about them will be immensely valuable if you envision doing the same.

👉 Interested folks can read it here: Sklearn Models are Not Deployment Friendly! Supercharge Them With Tensor Computations.

Hope you learned something new today :)

Thanks for reading!
